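There is a simple equation for the entropy change of the surroundings: $\Delta S_{\text{surroundings}} = -\Delta H / T$. ΔH is the enthalpy change for the reaction, and T is the absolute temperature in kelvin. That seems easy, but there is a major trap to fall into here, and if you manage to get through your course without falling into it at least once, you will have done really well! There is a mismatch between the units of enthalpy change and entropy change. When you quote figures for enthalpy change they will have energy units of kJ, whereas entropy changes are quoted in J, so you must convert the enthalpy change into joules (multiply by 1000) before dividing by the temperature.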
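As a minimal sketch of the calculation described above, the function below applies the standard relation ΔS(surroundings) = -ΔH / T and handles the kJ to J conversion explicitly. The function name, parameter names, and the numbers in the usage lines are illustrative assumptions, not values from the original page.

```python
def entropy_change_surroundings(delta_h_kj_per_mol: float, temperature_k: float) -> float:
    """Return the entropy change of the surroundings in J K^-1 mol^-1.

    delta_h_kj_per_mol: enthalpy change of the reaction in kJ mol^-1
    temperature_k: absolute temperature in kelvin
    """
    if temperature_k <= 0:
        raise ValueError("temperature must be a positive absolute temperature in K")
    # The trap: enthalpy changes are quoted in kJ, entropy changes in J.
    delta_h_j_per_mol = delta_h_kj_per_mol * 1000.0
    return -delta_h_j_per_mol / temperature_k


# Hypothetical exothermic reaction: delta H = -100 kJ/mol at 298 K.
example = entropy_change_surroundings(-100.0, 298.0)
print(f"{example:.1f} J/K/mol")
```

Forgetting the factor of 1000 would give an answer a thousand times too small, which is the unit mismatch the text warns about.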

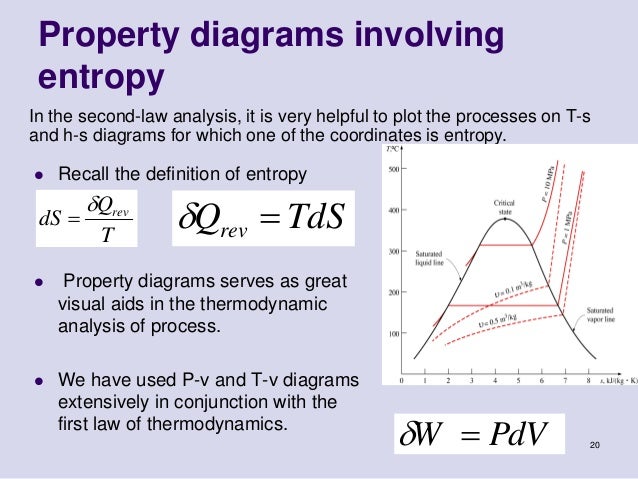

So far, you know how to work out the entropy change of the system for a given reaction if you are told the entropies of all the substances involved in the reaction. You should be able to judge what is acceptable to your examiners by looking at how this is presented in your syllabus.

Calculating the entropy change of the surroundings

What matters is the total entropy change, which is the sum of the entropy changes of the system and the surroundings. If you add more energy to the surroundings, the number of different possibilities for arranging the energy over the molecules increases, and so increasing the temperature of the surroundings increases their entropy. An endothermic reaction will cool the surroundings, and so the entropy of the surroundings decreases. The reverse is true for an exothermic change.

Note: I have deliberately left out the "standard" symbols in this equation. That is because we shall be using this equation under non-standard conditions.
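The idea that the total entropy change is the sum of the system and surroundings terms can be sketched in a few lines. This is an illustration under stated assumptions: the surroundings term uses the standard relation -ΔH / T, feasibility is taken to mean a positive total entropy change, and the function names and numbers are hypothetical.

```python
def total_entropy_change(delta_s_system_j: float,
                         delta_h_kj: float,
                         temperature_k: float) -> float:
    """Total entropy change in J K^-1 mol^-1.

    delta_s_system_j: entropy change of the system in J K^-1 mol^-1
    delta_h_kj: enthalpy change of the reaction in kJ mol^-1
    temperature_k: absolute temperature in kelvin
    """
    if temperature_k <= 0:
        raise ValueError("temperature must be a positive absolute temperature in K")
    # Convert kJ to J before dividing by T.
    delta_s_surroundings_j = -(delta_h_kj * 1000.0) / temperature_k
    return delta_s_system_j + delta_s_surroundings_j


def is_feasible(delta_s_system_j: float, delta_h_kj: float, temperature_k: float) -> bool:
    """A change is treated as feasible when the total entropy change is positive."""
    return total_entropy_change(delta_s_system_j, delta_h_kj, temperature_k) > 0


# Hypothetical exothermic reaction whose system entropy falls.
print(is_feasible(-150.0, -100.0, 298.0))   # surroundings term outweighs the system term
print(is_feasible(-150.0, -100.0, 1000.0))  # at high T the surroundings term is smaller
```

The two calls show why the system term alone is not enough: the same reaction can switch from feasible to not feasible as the temperature rises, purely because the surroundings term shrinks.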

If a reaction is exothermic, heat is given off to the surroundings, and that extra heat increases the entropy of the surroundings. If you only calculate the entropy change of the reaction (the entropy change of the system), you are leaving out an important factor. If you do need to read this page, make sure you have first read the page explaining how to calculate the entropy change of the system.

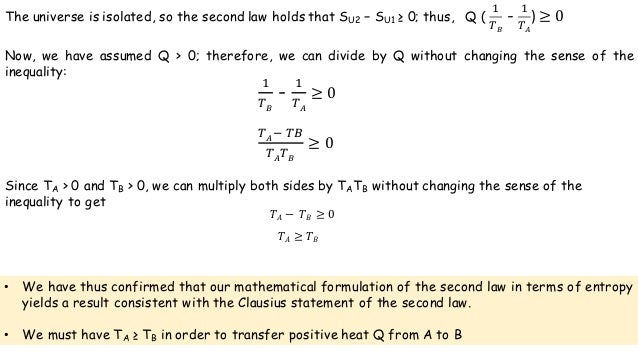

This page considers various entropy changes: of the system, of the surroundings, and the total change. It goes on to look at how you can use the total entropy change to decide whether or not a reaction is feasible.

Note: If your syllabus doesn't specifically mention entropy change terms like system, surroundings and total, you could safely ignore this page. You will still probably have to be able to work out the feasibility of reactions, but that will be done by the rather less confusing use of an equation based on Gibbs free energy.

How surprising is an event? Informally, the lower the probability you would have assigned to an event, the more surprising it is, so surprise seems to be some kind of decreasing function of probability. It's reasonable to ask that it be continuous in the probability. And if event $A$ has a certain amount of surprise, and event $B$ has a certain amount of surprise, and you observe them together, and they're independent, it's reasonable that the amounts of surprise add. From here it follows that the surprise you feel at event $A$ happening must be a positive constant multiple of $-\log \mathbb{P}(A)$.

Now let $A$ and $B$ be alphabets with $n$ and $m$ letters respectively. In this case the entropy depends only on the sizes of $A$ and $B$, through $\log_m(n)$. To prove this is the correct function for the entropy we consider an encoding $E: A^r \rightarrow B^s$ that encodes blocks of $r$ letters in $A$ as $s$ characters in $B$. The size of the domain is $n^r$ and the size of the range is $m^s$. The size of the range of $E$ must be greater than or equal to the size of the domain, or otherwise two different messages in the domain would have to map to the same encoding in the range. So $m^s \ge n^r$, that is, $s/r \ge \log_m(n)$. We choose $s$ to satisfy this inequality.
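The two information-theory ideas above can be checked numerically. The sketch below assumes the surprise of an event is measured as $-\log \mathbb{P}(A)$ with the constant fixed by a choice of logarithm base, and that the alphabets have $n$ and $m$ letters. The function names `surprisal` and `min_code_length` are hypothetical helpers introduced for illustration.

```python
import math


def surprisal(p: float, base: float = 2.0) -> float:
    """Surprise of an event with probability p, measured as -log_base(p)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return -math.log(p) / math.log(base)


def min_code_length(n: int, m: int, r: int) -> int:
    """Smallest s with m**s >= n**r, using exact integer arithmetic.

    n: size of the source alphabet, m: size of the code alphabet,
    r: number of source letters per block.
    """
    if n < 1 or m < 2 or r < 0:
        raise ValueError("need n >= 1, m >= 2 and r >= 0")
    target = n ** r   # size of the domain A^r
    s, power = 0, 1   # power tracks m**s, the size of the range B^s
    while power < target:
        power *= m
        s += 1
    return s


# Independent events: probabilities multiply, so surprise adds.
p_a, p_b = 0.5, 0.25
print(surprisal(p_a * p_b), surprisal(p_a) + surprisal(p_b))

# Encoding blocks of 26-letter text in binary: s / r approaches log_2(26) from above.
for r in (1, 10, 100):
    s = min_code_length(26, 2, r)
    print(r, s, s / r, math.log(26, 2))
```

Longer blocks waste less of the range, so the ratio $s/r$ gets closer to $\log_m(n)$ as $r$ grows, which is the sense in which $\log_m(n)$ measures the characters of $B$ needed per letter of $A$.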
